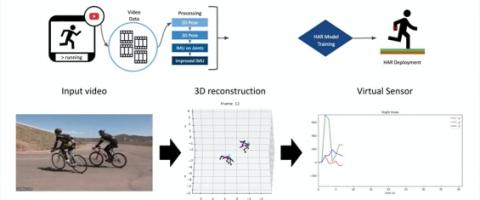

The lack of large-scale, labeled datasets impedes progress in developing robust, generalizable predictive models for on-body sensor-based human activity recognition (HAR). Labeled HAR data is scarce because sensor data collection is expensive and annotation is time-consuming and error-prone. To address this problem, we introduce IMUTube, an automated processing pipeline that integrates existing computer vision and signal processing techniques to convert videos of human activity into virtual streams of inertial measurement unit (IMU) data.
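To give a rough sense of what turning video into virtual accelerometry involves, the sketch below differentiates a 3D joint trajectory twice with respect to time. It is an illustration under stated assumptions, not the IMUTube implementation: it assumes a pose-estimation step has already produced per-frame joint positions in metres, and the function name `virtual_acceleration` and the synthetic track are hypothetical. A real accelerometer also senses gravity and reports values in its own rotating frame, both of which this sketch ignores.

```python
import numpy as np


def virtual_acceleration(positions, fps):
    """Approximate linear acceleration (m/s^2) from a 3D position track.

    positions: array of shape (T, 3), one joint position in metres per frame
               (assumed to come from an upstream pose-estimation step).
    fps:       video frame rate in Hz.
    """
    positions = np.asarray(positions, dtype=float)
    dt = 1.0 / fps
    # First derivative of position over time gives velocity.
    velocity = np.gradient(positions, dt, axis=0, edge_order=2)
    # Second derivative gives acceleration in the world frame.
    return np.gradient(velocity, dt, axis=0, edge_order=2)


# Synthetic check: a joint moving with constant acceleration a_true.
fps = 30
t = np.arange(60) / fps
a_true = np.array([0.5, 0.0, -1.0])
track = 0.5 * a_true * t[:, None] ** 2  # x(t) = a * t^2 / 2
acc = virtual_acceleration(track, fps)
```

On this noise-free synthetic track the recovered acceleration matches `a_true`. Positions estimated from real video are noisy, and double differentiation amplifies that noise, so some smoothing or filtering would likely be needed before differentiating.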

Project Name

IMUTube

Faculty Lead(s)

Gregory D. Abowd, Thomas Ploetz

Student Name(s)

Hyeokhyen Kwon

Main Contact

N/A

Lab Name

Ubiquitous Computing Group

Video Title

IMUTube: Automatic Extraction of Virtual on-body Accelerometry from Video for Human Activity Recognition.

Video URL

[VIDEO::https://www.youtube.com/watch?v=waWV-AGtiAk]